Part 2 - AI regulation in the EU

This is Part 2 of 'Regulation of AI'

AI regulation in the EU has been codified under the EU AI Act, Regulation (EU) 2024/1689. Key details are set out below.

Overview

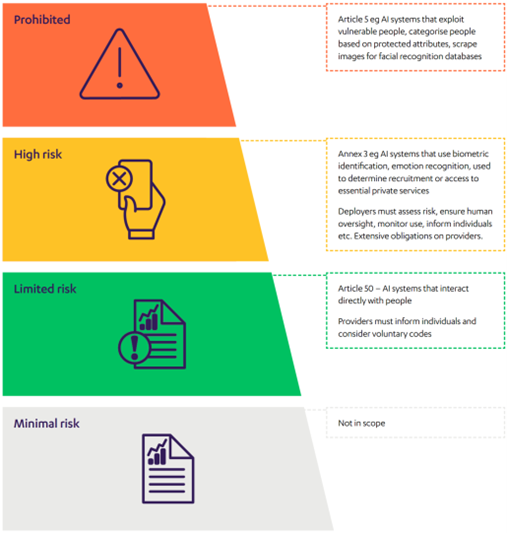

The AI Act is based on a risk framework. The intention is to achieve proportionality by calibrating regulation to the potential risk that an AI system poses to health, safety, fundamental rights or the environment.

What does it apply to?

The AI Act applies to AI systems, defined as "a machine-based system designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment and that, for explicit or implicit objectives, infers from the input it receives, how to generate output such as predictions, content, recommendations, or decisions that can influence physical or virtual environments".

Who does it apply to?

The AI Act will apply to both public and private actors inside and outside the EU as long as the AI system is placed on the EU market or its use affects people located in the EU. It covers all entities within the AI value chain from providers through importers and distributors to deployers.

Most of the onus for compliance will fall on providers of AI systems. However, a deployer may become subject to the obligations on a provider in certain circumstances.

Risk-based approach

The AI Act takes a risk-based approach. AI systems that pose unacceptable risks are prohibited, while those considered high risk are permitted only if they comply with certain mandatory requirements. AI systems that fall into neither category ("limited risk") are subject to transparency requirements, and providers are encouraged to create and comply with codes of conduct that adapt the high-risk AI system requirements for these lower risk use cases. See diagram below for an example of the types of AI systems that would fall within each level of risk and the implications.

For most businesses, the AI systems they intend to use are unlikely to fall within the high risk category. One exception is AI systems for recruitment, which are readily available on the market and which many businesses are exploring. Limited risk AI systems are more likely to be relevant to businesses, as these cover chatbots which interact directly with people.

Compliance

Some of the obligations under the EU AI Act are vague or subject to interpretation. To address issues with implementation, the AI Act also provides for the development of harmonised standards by European standardisation organisations to flesh out requirements under the law. Organisations that comply with these standards will enjoy a legal presumption of conformity with certain elements of the AI Act.

Separately, some guidance has already been published under the AI Act. In February 2025, the European Commission published draft guidelines on prohibited AI practices and, separately, draft guidelines on AI systems, in each case as defined under the AI Act.

GPAI requirements

There are additional obligations that apply to general purpose AI models (GPAI models), such as the models underlying ChatGPT. Providers of GPAI models must produce technical documentation to show how the AI model operates and provide information to the public about the datasets the model is trained on. Providers must also produce policies to ensure that EU copyright rules are followed. Additional rules apply where the GPAI model is considered to pose systemic risks. A code of practice for GPAI is currently being produced by the European Commission.

AI literacy

Article 4 requires all businesses in scope of the EU AI Act (whether provider or deployer) to take measures to ensure a sufficient level of AI literacy among their staff, irrespective of the level of risk of the AI system. The EU AI Act does not prescribe how businesses should train their staff. Article 4 is intended to apply proportionately (e.g. depending on the staff involved and the context in which AI is used). Ultimately, training should allow businesses to make informed decisions about AI deployment. The European Commission has produced a Q&A on AI literacy and the EU AI Office has also started a living repository to provide businesses with good examples of AI literacy practices.

Enforcement

Non-compliance with certain requirements of the EU AI Act can lead to significant monetary penalties. Depending on the type of infringement, the maximum fine is the higher of €7.5m or 1% of global turnover, the higher of €15m or 3% of global turnover, or the higher of €35m or 7% of global turnover. However, EU Member States must set the specific rules on penalties and enforcement measures in line with the EU AI Act and any future guidance.
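
As a rough illustration of the "higher of" arithmetic described above, the following Python sketch computes the maximum fine for a given global turnover. The tier names (`lower`, `middle`, `upper`) and the function name `max_fine` are hypothetical labels chosen for this example, not terms from the AI Act, and the sketch ignores any Member State rules or adjustments that may apply in practice.

```python
from decimal import Decimal

# (fixed amount in euros, share of global turnover) for each tier listed in the text.
# The tier names are illustrative labels only (an assumption of this sketch).
PENALTY_TIERS = {
    "lower": (Decimal("7500000"), Decimal("0.01")),
    "middle": (Decimal("15000000"), Decimal("0.03")),
    "upper": (Decimal("35000000"), Decimal("0.07")),
}


def max_fine(tier: str, global_turnover_eur: Decimal) -> Decimal:
    """Return the maximum fine: the higher of the fixed amount and the turnover share."""
    fixed_amount, turnover_share = PENALTY_TIERS[tier]
    return max(fixed_amount, turnover_share * global_turnover_eur)
```

For example, on these assumptions a business with €1bn global turnover would face an upper-tier maximum of €70m (7% of turnover exceeds €35m), whereas a business with €100m turnover would face a lower-tier maximum of €7.5m (the fixed amount exceeds 1% of turnover).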

When do I need to comply?

The EU AI Act entered into force across all 27 EU member states on 1 August 2024. The Act provides for most requirements to apply from 2 August 2026, with certain provisions applying earlier: for example, the prohibition on use of banned AI technologies and the AI literacy requirement have applied since 2 February 2025. However, there has since been discussion as to whether implementation should be paused to allow businesses to ready themselves for compliance.
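
For teams tracking these dates in an internal compliance tool, the timeline above could be sketched in Python as follows. The milestone labels and the function name `milestones_reached` are hypothetical. The sketch covers only the three dates mentioned in this section, so it does not reflect other staged dates in the Act or any pause that may be agreed.

```python
from datetime import date

# Dates as stated in the text; labels are illustrative shorthand (an assumption).
MILESTONES = [
    (date(2024, 8, 1), "Act in force"),
    (date(2025, 2, 2), "Prohibited practices and AI literacy apply"),
    (date(2026, 8, 2), "Most remaining requirements apply"),
]


def milestones_reached(on: date) -> list[str]:
    """Return the labels of all milestones whose date falls on or before `on`."""
    return [label for start, label in MILESTONES if start <= on]
```

Calling `milestones_reached(date(2025, 6, 1))` would return the first two labels, since the 2 August 2026 date had not yet been reached.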
